Status Quo?

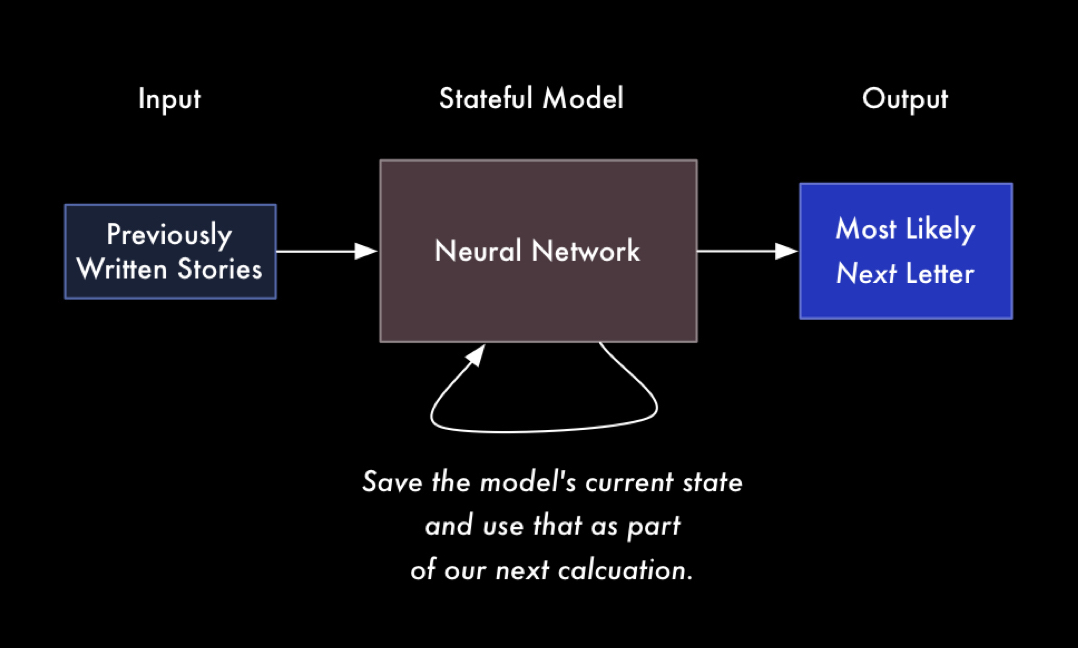

1. Recurrent Neural Networks

Robert Cohn was once middleweight boxi…

What letter is going to come next?

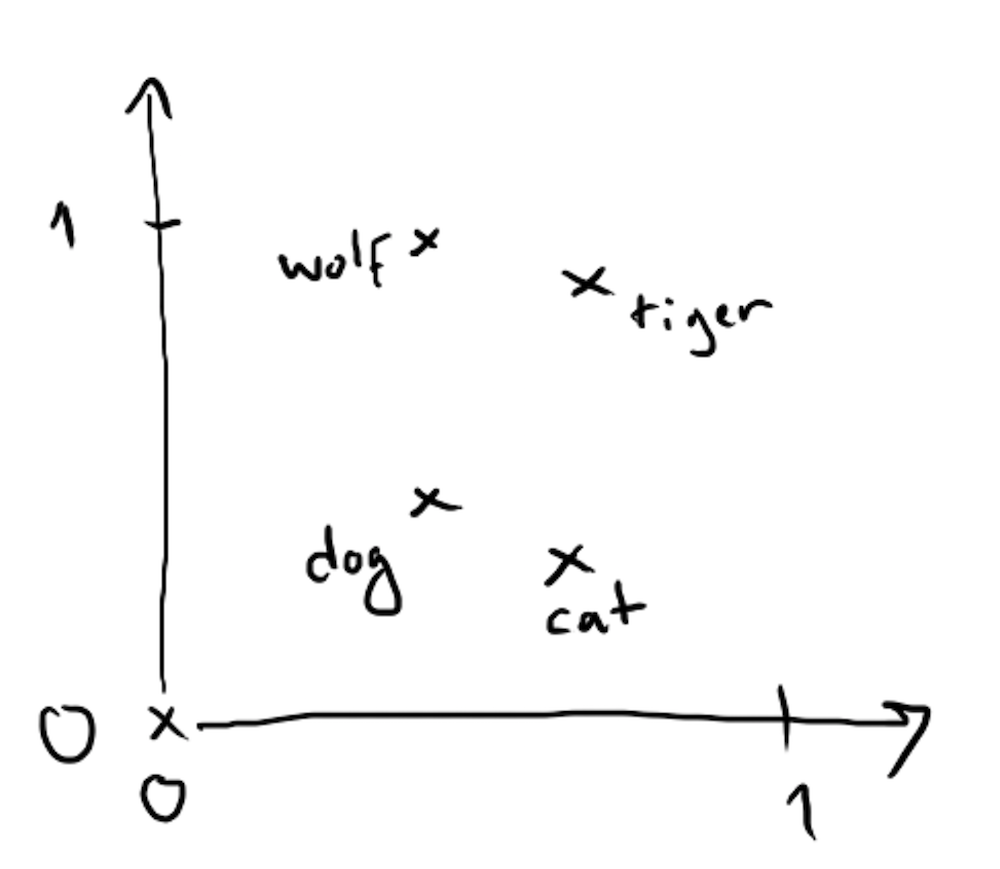

Word Embedding Space

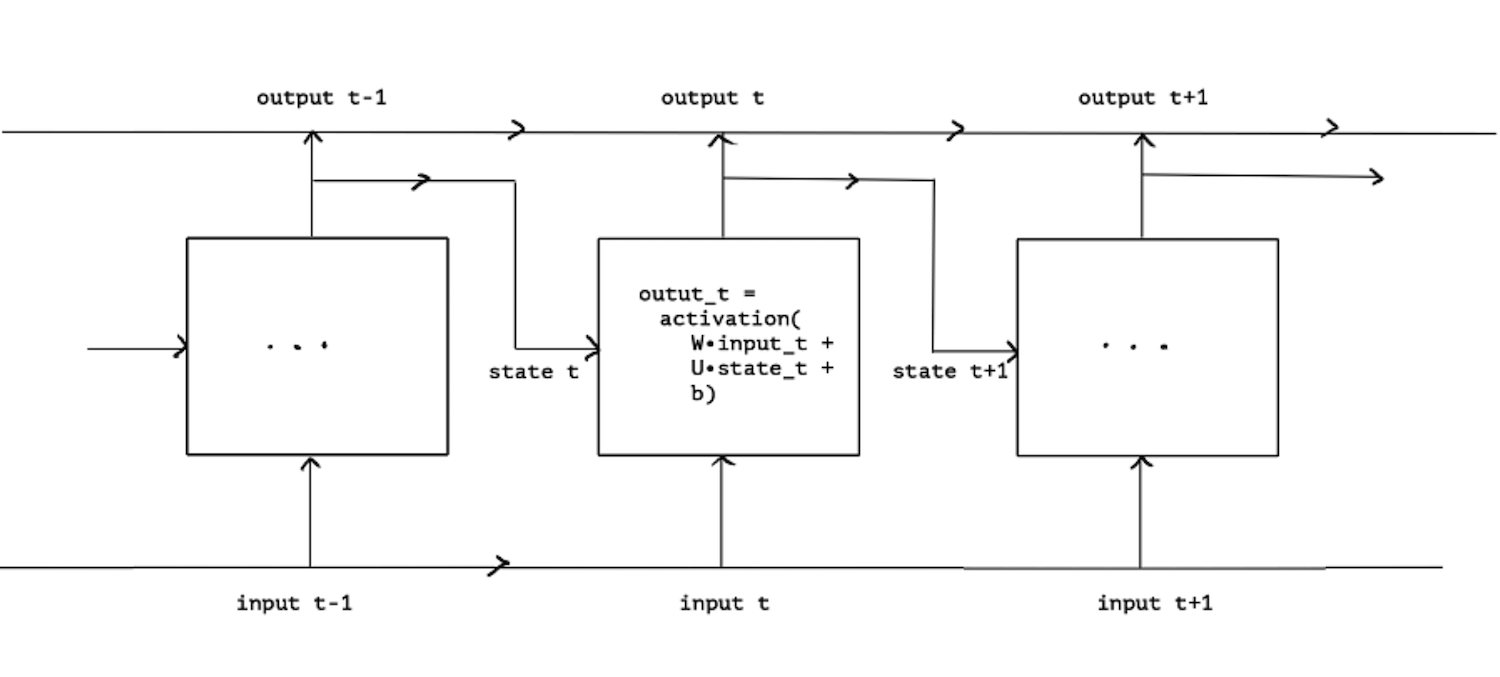

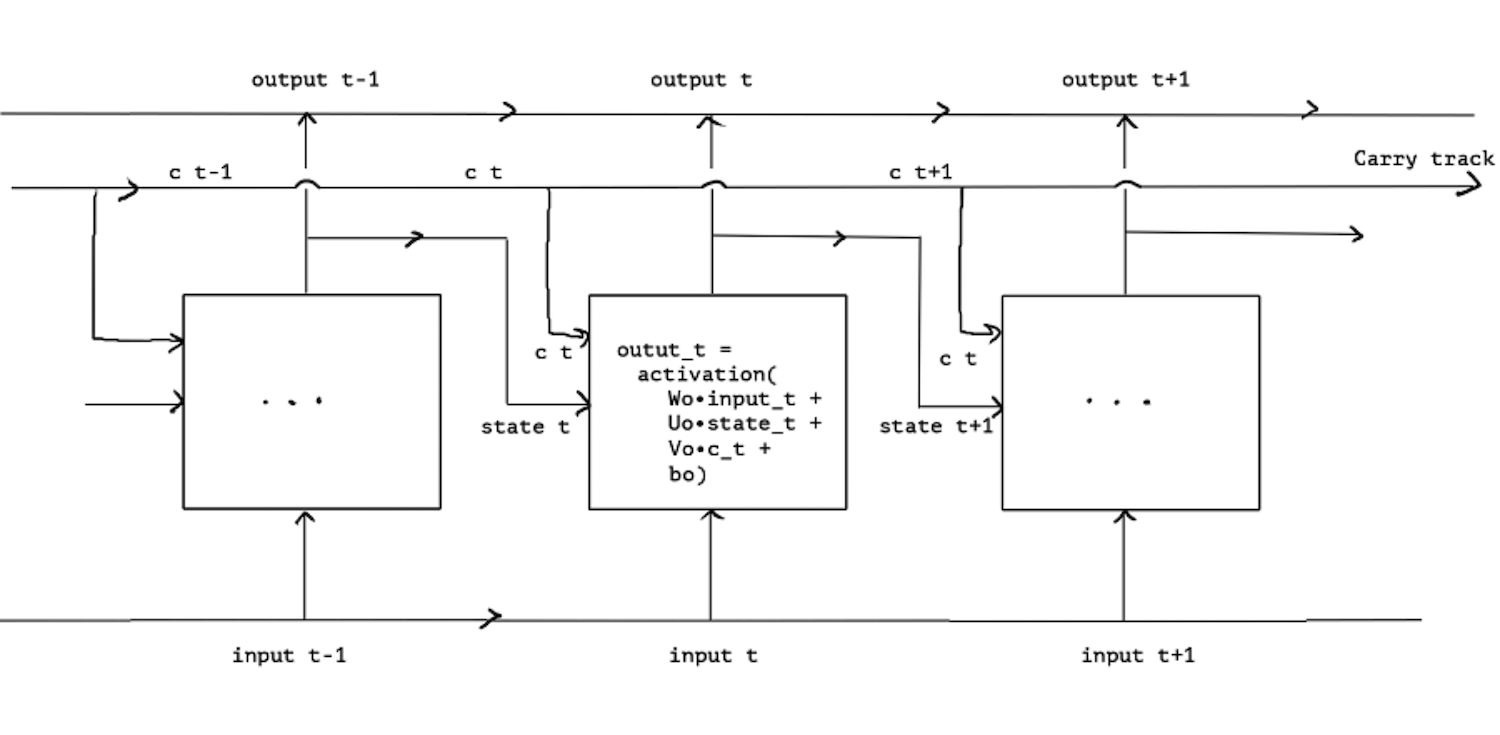

RNN Structure
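
As a rough illustration of the recurrent structure, here is a minimal sketch of a vanilla (Elman-style) RNN cell, assuming the usual update h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h). The slides do not specify an exact formulation, so the function names (`rnn_step`, `rnn_forward`), the weight names, and the toy values are all illustrative assumptions. Plain Python lists are used to keep the example dependency-free.

```python
import math

def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]

def rnn_step(x_t, h_prev, W_xh, W_hh, b_h):
    """One recurrent update: mix the current input with the previous hidden state."""
    from_input = matvec(W_xh, x_t)
    from_state = matvec(W_hh, h_prev)
    return [math.tanh(a + c + b) for a, c, b in zip(from_input, from_state, b_h)]

def rnn_forward(inputs, h0, W_xh, W_hh, b_h):
    """Run the cell over a whole sequence, returning every hidden state."""
    h = h0
    states = []
    for x_t in inputs:
        h = rnn_step(x_t, h, W_xh, W_hh, b_h)
        states.append(h)
    return states

# Toy example (hypothetical weights): 2-dimensional one-hot inputs, 2 hidden units.
W_xh = [[0.5, -0.5], [0.25, 0.75]]
W_hh = [[0.1, 0.0], [0.0, 0.1]]
b_h = [0.0, 0.0]
inputs = [[1.0, 0.0], [0.0, 1.0]]
states = rnn_forward(inputs, [0.0, 0.0], W_xh, W_hh, b_h)
```

The same weights are reused at every time step; only the hidden state `h` carries information forward through the sequence.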

LSTM / GRU Structure

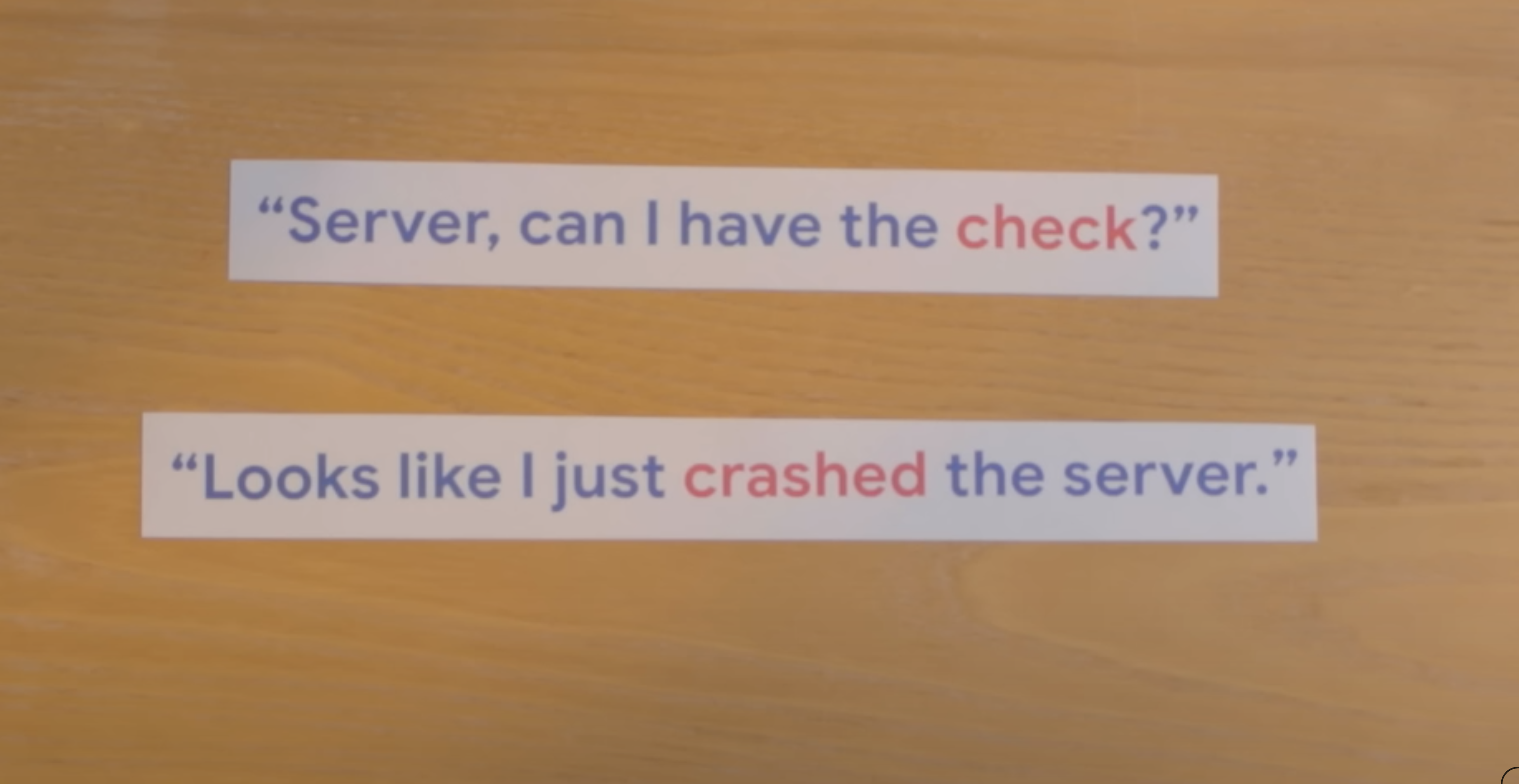

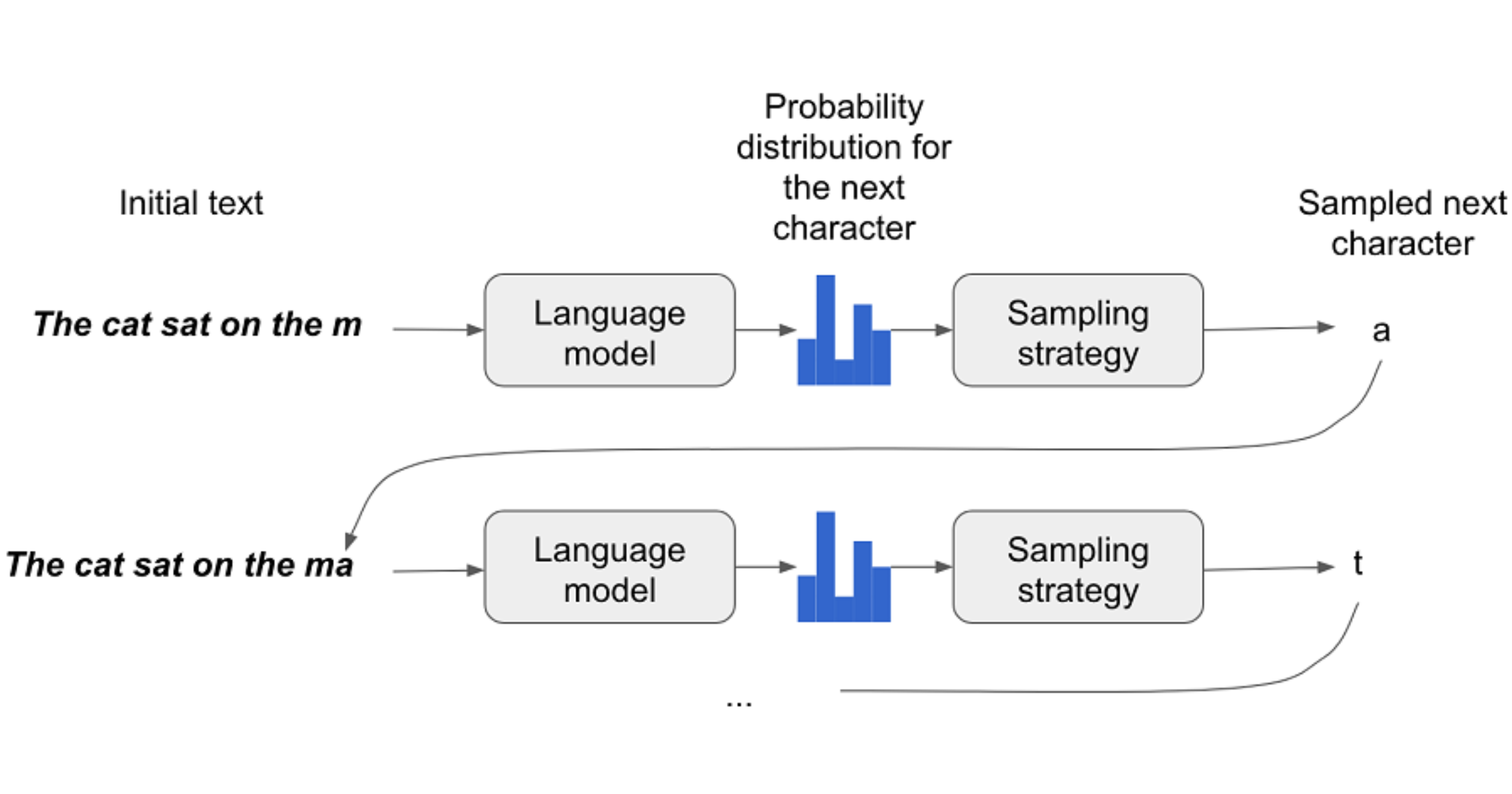

How can we generate sequence data?

2. Transformers

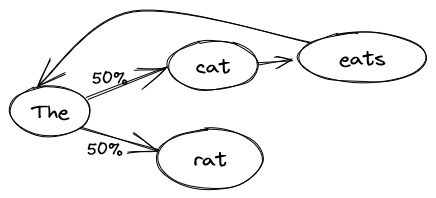

A probabilistic approach

Positional Encoding

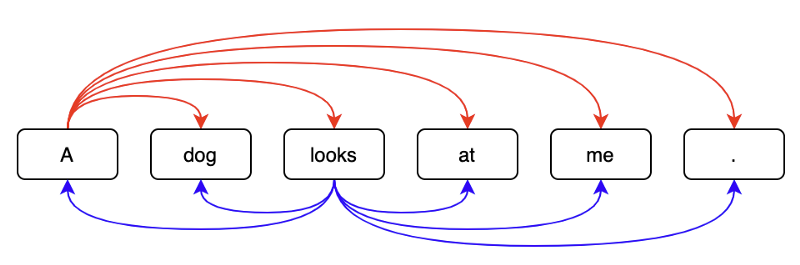

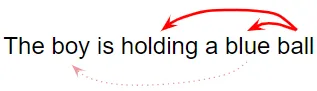

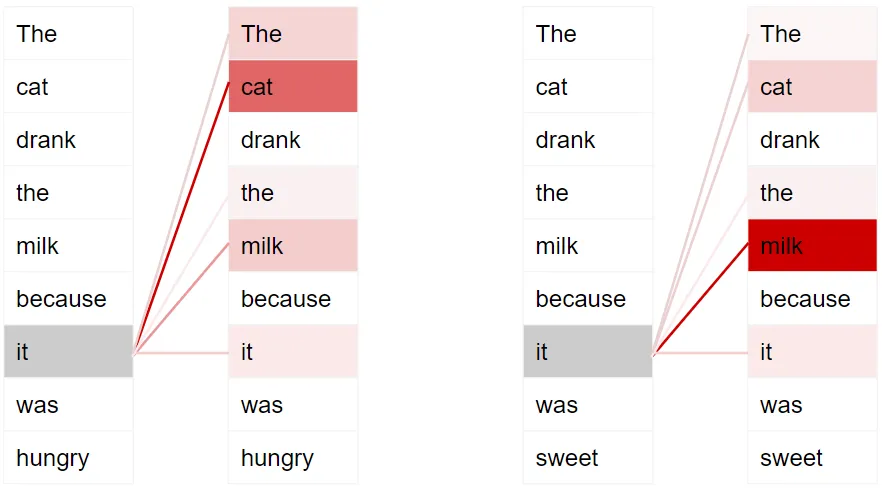

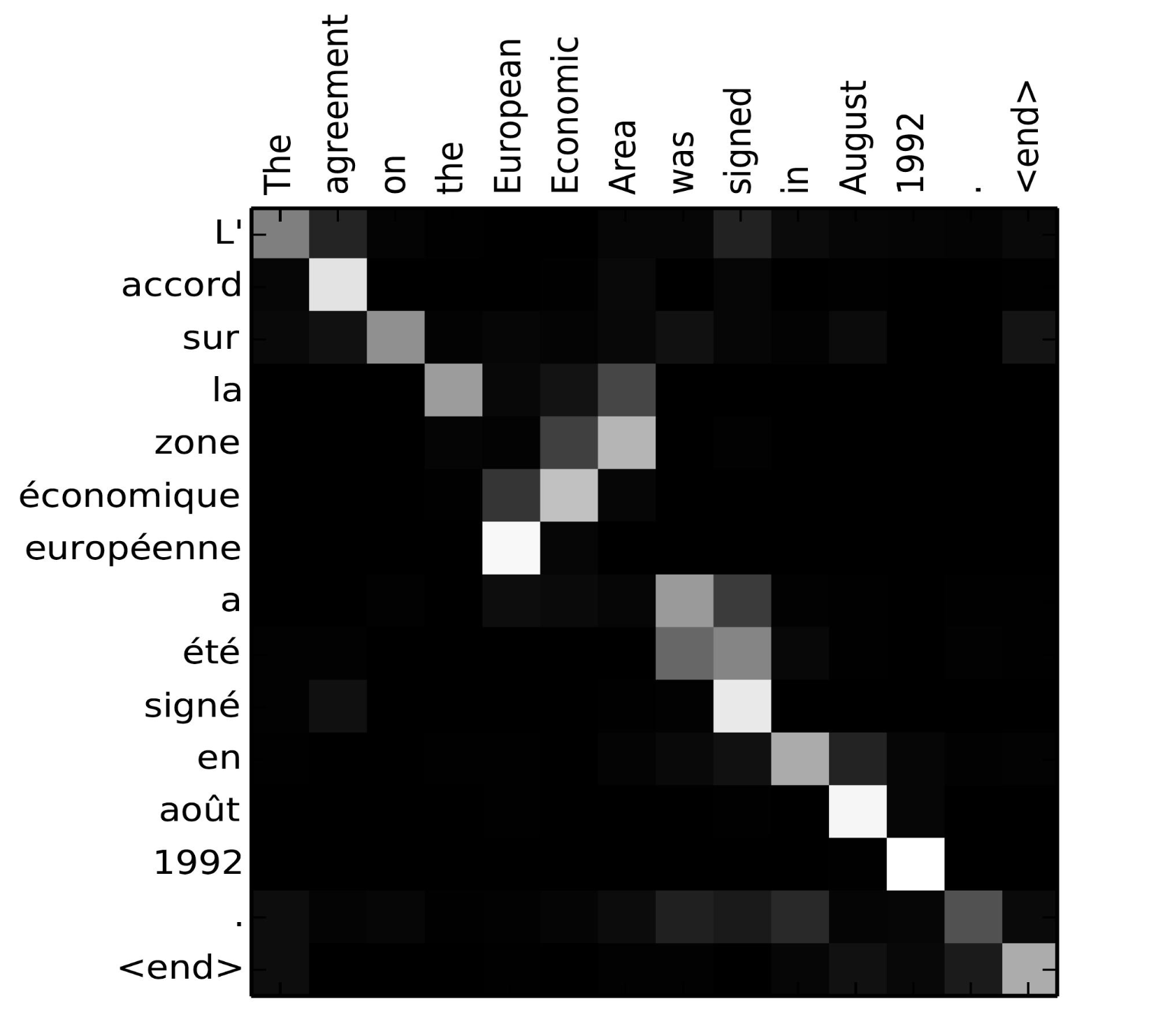
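
For the positional encoding topic, the sketch below assumes the sinusoidal scheme from the original Transformer paper, where even dimensions use a sine and odd dimensions a cosine of the position scaled by 10000^(2i/d_model). The slides do not say which scheme is meant, so treat this as one common option; the function name and the even-`d_model` restriction are assumptions made for this example.

```python
import math

def sinusoidal_positional_encoding(seq_len, d_model):
    """Return a seq_len x d_model table (list of rows) of position encodings."""
    if d_model % 2 != 0:
        raise ValueError("this sketch assumes an even d_model")
    table = []
    for pos in range(seq_len):
        row = []
        # i walks over the even dimension indices 0, 2, 4, ...
        for i in range(0, d_model, 2):
            angle = pos / (10000 ** (i / d_model))
            row.append(math.sin(angle))  # dimension i
            row.append(math.cos(angle))  # dimension i + 1
        table.append(row)
    return table

pe = sinusoidal_positional_encoding(seq_len=4, d_model=6)
```

Each row of `pe` would be added to the embedding of the token at that position, giving the model access to order information that attention alone does not provide.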

Attention

Self-Attention
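
A minimal sketch of single-head self-attention, assuming the standard scaled dot-product form softmax(Q K^T / sqrt(d_k)) V with queries, keys, and values all projected from the same input sequence. The helper names, the identity projection matrices, and the toy input are illustrative assumptions, not taken from the slides.

```python
import math

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(row):
    """Numerically stable softmax over one list of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(X, W_q, W_k, W_v):
    """Return (outputs, attention_weights) for one attention head."""
    Q = matmul(X, W_q)
    K = matmul(X, W_k)
    V = matmul(X, W_v)
    d_k = len(K[0])
    K_T = [list(col) for col in zip(*K)]
    scores = matmul(Q, K_T)
    # Scale by sqrt(d_k), then normalise each query's scores into weights.
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, V), weights

# Toy example: 3 tokens with 2-dimensional embeddings, identity projections.
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
output, weights = self_attention(X, identity, identity, identity)
```

Each row of `weights` sums to 1 and says how strongly one token attends to every token in the sequence, itself included; each row of `output` is the corresponding weighted average of the value vectors.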